All for the sake of downloading the garbage recordings that my garbage school hosts on garbage Chaoxing

Preparation

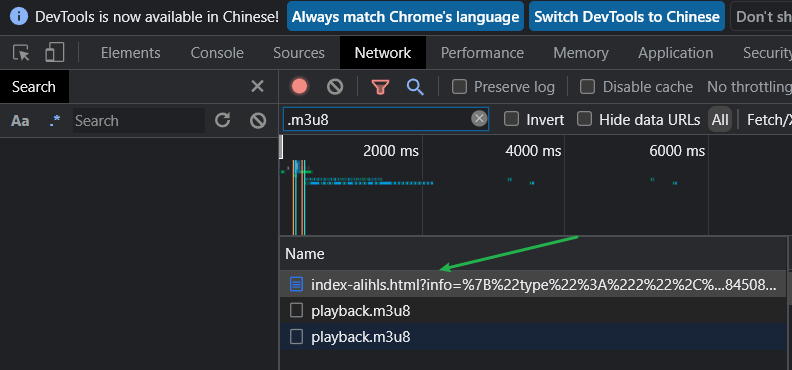

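First, open the Chaoxing recording page, press F12 to capture the traffic, and search the Network tab for `m3u8`.

- The two `playback.m3u8` entries at the bottom are the two videos in the player, one large and one small
- What matters is the request the arrow points to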
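For context on what a `playback.m3u8` entry holds: an m3u8 file is an HLS playlist, a plain-text file where lines starting with `#` are tags and the remaining lines are media URIs. Below is a minimal sketch of listing the segment URLs from such a playlist. The playlist content and the `example.com` URL are made up for illustration, and the real Chaoxing playlists may look different.

```python
from urllib.parse import urljoin


def list_segments(playlist_text: str, playlist_url: str) -> list[str]:
    """Return absolute URLs for every media URI named in an HLS playlist."""
    segments = []
    for line in playlist_text.splitlines():
        line = line.strip()
        # Blank lines and lines starting with '#' are tags or comments, not URIs.
        if not line or line.startswith("#"):
            continue
        # URIs may be relative to the playlist's own URL.
        segments.append(urljoin(playlist_url, line))
    return segments


# Hypothetical playlist content, for illustration only.
SAMPLE_PLAYLIST = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:10
#EXTINF:10.0,
seg0.ts
#EXTINF:10.0,
seg1.ts
#EXT-X-ENDLIST
"""

segments = list_segments(SAMPLE_PLAYLIST, "https://example.com/live/playback.m3u8")
for url in segments:
    print(url)
```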

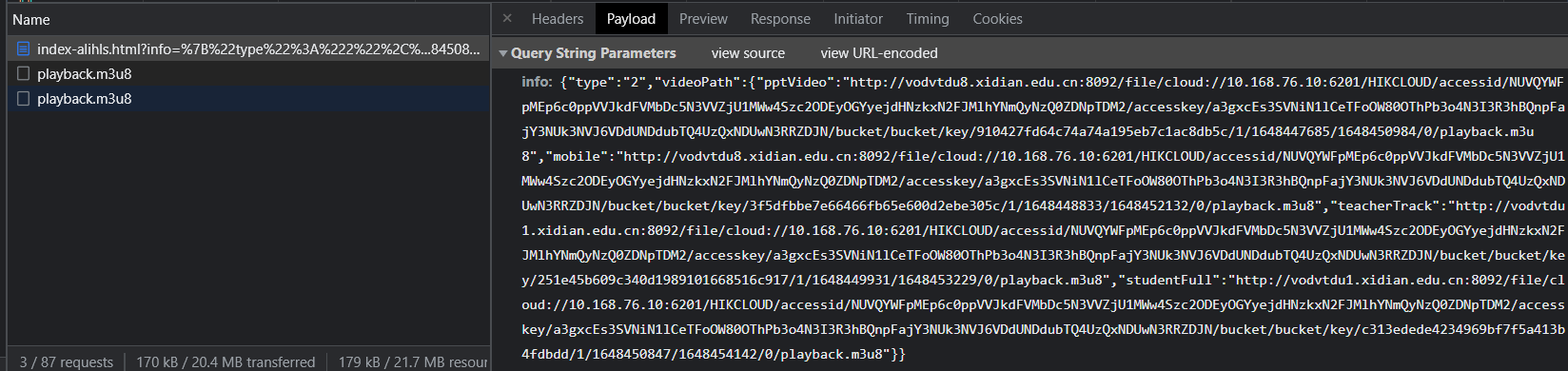

This is a GET request with its parameters in the query string; copy the payload out:

    {
        "type": "2",
        "videoPath": {
            "pptVideo": "...",
            "mobile": "...",
            "teacherTrack": "...",
            "studentFull": "..."
        }
    }

- pptVideo: the slide view (the small video we see in the player)

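- mobile: this one is neat, it switches back and forth between teacherTrack and pptVideo, which is actually pretty smart (I've decided to always download this one from now on)

- teacherTrack: the teacher-tracking view (the large video we see in the player)

- studentFull: surveillance footage pointed at the students XD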
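The four views above can be selected by key once the payload is parsed. Here is a minimal sketch, assuming the payload has the shape shown; `pick_stream` and `VIEW_TYPES` are names invented for this example, and the `example.com` URLs are placeholders for the real values.

```python
import json

# The four view names that appear under "videoPath" in the copied payload.
VIEW_TYPES = ("pptVideo", "mobile", "teacherTrack", "studentFull")


def pick_stream(payload: dict, view: str = "mobile") -> str:
    """Return the URL stored for the requested view."""
    if view not in VIEW_TYPES:
        raise ValueError(f"unknown view {view!r}, expected one of {VIEW_TYPES}")
    return payload["videoPath"][view]


# Placeholder URLs; the real values come from the copied payload.
sample = json.loads("""
{
  "type": "2",
  "videoPath": {
    "pptVideo": "https://example.com/ppt",
    "mobile": "https://example.com/mobile",
    "teacherTrack": "https://example.com/teacher",
    "studentFull": "https://example.com/student"
  }
}
""")

print(pick_stream(sample))                  # the auto-switching view
print(pick_stream(sample, "teacherTrack"))  # the large teacher view
```

Rejecting unknown view names up front turns a typo into a clear error rather than a bare `KeyError`.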

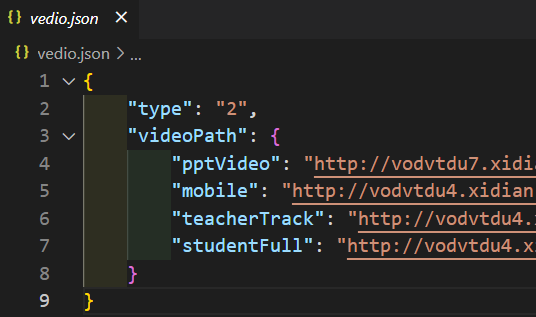

Put what you copied into `vedio.json`.
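If you would rather not copy the payload by hand, it can also be pulled out of the copied request URL. This is only a sketch: I am assuming the JSON travels as the value of one query parameter, and since its real name is not shown here, the code tries every parameter and keeps the one that decodes to JSON containing `videoPath`. The parameter name `info` and the `example.com` URL in the demo are hypothetical.

```python
import json
from urllib.parse import parse_qsl, urlencode, urlsplit


def extract_payload(request_url: str) -> dict:
    """Find the query parameter whose value is JSON containing "videoPath"."""
    # parse_qsl percent-decodes each value.
    for _name, value in parse_qsl(urlsplit(request_url).query):
        try:
            data = json.loads(value)
        except ValueError:  # not JSON, try the next parameter
            continue
        if isinstance(data, dict) and "videoPath" in data:
            return data
    raise ValueError("no query parameter carries a videoPath payload")


def save_payload(payload: dict, path: str = "vedio.json") -> None:
    """Write the payload to the JSON file the download script reads."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(payload, f, ensure_ascii=False, indent=2)


# Build a demo URL; "info" is a hypothetical parameter name.
demo_payload = {"type": "2", "videoPath": {"mobile": "https://example.com/mobile"}}
demo_url = "https://example.com/player?" + urlencode({"info": json.dumps(demo_payload)})

print(extract_payload(demo_url))
```

With a real URL copied from the Network tab, `save_payload(extract_payload(url))` would write `vedio.json` into the current directory.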

Download

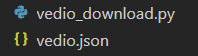

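The directory structure is as follows:

Below is the code for `vedio_download.py`; change the variables under `var` as needed

- saveName: the name of the saved file

- vedioType: one of the 4 types in the JSON, default mobile

- threadNumber: the number of threads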

import requests, json, time, threading, os